The fHMM package is an implementation of the hidden Markov model with a focus on applications to financial time series data. This vignette introduces the model and its hierarchical extension. It closely follows Oelschläger and Adam (2021).

The Hidden Markov Model

Hidden Markov models (HMMs) are a modeling framework for time series data in which a sequence of observations is assumed to depend on a latent state process. Their defining feature is that, unlike the observation process, the state process cannot be observed directly. The latent states nevertheless carry information about the environment to which the model is applied.

The connection between the hidden state process and the observed state-dependent process is as follows: Let $N$ be the number of possible states. We assume that at each point in time $t = 1, \dots, T$, an underlying process $(S_t)_{t = 1, \dots, T}$ selects one of those $N$ states. Then, depending on the active state $S_t \in \{1, \dots, N\}$, the observation $X_t$ from the state-dependent process $(X_t)_{t = 1, \dots, T}$ is generated by one of $N$ distributions $f^{(1)}, \dots, f^{(N)}$.

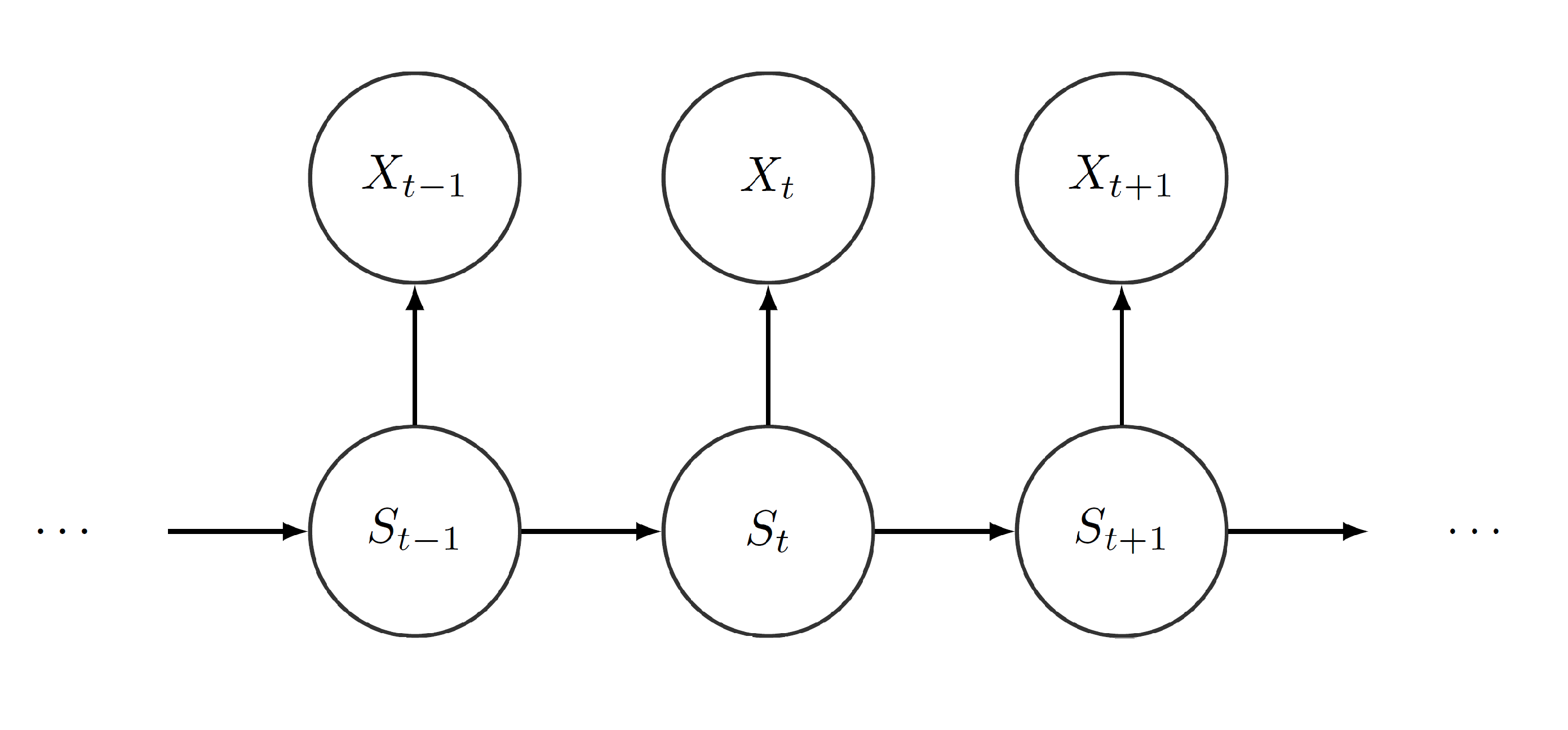

Furthermore, we assume the state process $(S_t)_t$ to be Markovian, i.e. we assume that the current state depends only on the previous state. Hence, we can identify the process by its initial distribution $\delta$ and its transition probability matrix (t.p.m.) $\Gamma$. Moreover, by construction, we force the state-dependent process $(X_t)_t$ to satisfy the conditional independence assumption, i.e. the current observation $X_t$ depends on the current state $S_t$, but does not depend on previous observations or states. The following graphic visualizes the dependence structure:

Referring to financial data, the different states can serve as proxies for the current market situation, e.g. calm or nervous. Even though these moods cannot be observed directly, price changes or trading volumes, which clearly depend on the current mood of the market, can be observed. Thereby, using an underlying Markov process, we can detect which mood is active at any point in time and how the different moods alternate. Depending on the current mood, a price change is generated by a different distribution. These distributions characterize the moods in terms of expected return and volatility.
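The market-mood interpretation can be made concrete with a small simulation. The following Python sketch is illustrative only and is not part of the fHMM package: it assumes two states, normal state-dependent distributions, and made-up parameter values, and all function and variable names are hypothetical.

```python
import random


def simulate_hmm(T, Gamma, delta, means, sds, seed=1):
    """Simulate T steps of an HMM with normal state-dependent distributions.

    Gamma is the transition probability matrix (list of rows), delta the
    initial distribution. The normal distributions are an assumption of
    this sketch, not a requirement of the model.
    """
    rng = random.Random(seed)
    n_states = len(delta)
    states, observations = [], []
    for t in range(T):
        # The first state is drawn from delta; every later state is drawn
        # from the row of Gamma that belongs to the previous state
        # (Markov property).
        weights = delta if t == 0 else Gamma[states[-1]]
        s = rng.choices(range(n_states), weights=weights)[0]
        # The observation depends on the current state only
        # (conditional independence assumption).
        x = rng.gauss(means[s], sds[s])
        states.append(s)
        observations.append(x)
    return states, observations


# Hypothetical parameters: state 0 = "calm", state 1 = "nervous".
Gamma = [[0.95, 0.05], [0.10, 0.90]]
delta = [2 / 3, 1 / 3]
states, returns = simulate_hmm(
    T=500, Gamma=Gamma, delta=delta, means=[0.001, -0.002], sds=[0.005, 0.02]
)
```

In this sketch the "nervous" state has a lower mean and a larger standard deviation, so simulated returns in that state are more volatile. Only `returns` would be available in practice; `states` is the part the model has to infer.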

Following Zucchini, MacDonald, and Langrock (2016), we assume that the initial distribution $\delta$ equals the stationary distribution $\pi$, where $\pi = \pi \Gamma$, i.e. the stationary and hence the initial distribution is determined by the t.p.m. $\Gamma$. This is reasonable from a practical point of view: On the one hand, the hidden state process has been evolving for some time before we start to observe it and hence can be assumed to be stationary. On the other hand, setting $\delta = \pi$ reduces the number of parameters that need to be estimated, which is convenient from a computational perspective.
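To illustrate how the stationary distribution follows from the transition probability matrix alone, the sketch below approximates it in plain Python. It uses power iteration (repeatedly multiplying a distribution by the matrix), which is a simple stand-in chosen for this example and not necessarily how fHMM computes it. The function name and the example matrix are hypothetical, and the method assumes the chain is irreducible and aperiodic.

```python
def stationary_distribution(Gamma, tol=1e-12, max_iter=100_000):
    """Approximate pi with pi = pi * Gamma by power iteration."""
    n = len(Gamma)
    pi = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(max_iter):
        # One step of the chain: new_pi[j] = sum_i pi[i] * Gamma[i][j].
        new_pi = [sum(pi[i] * Gamma[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new_pi, pi)) < tol:
            return new_pi
        pi = new_pi
    raise RuntimeError("power iteration did not converge")


# Hypothetical two-state transition probability matrix.
Gamma = [[0.95, 0.05], [0.10, 0.90]]
pi = stationary_distribution(Gamma)
```

For this matrix the balance condition gives the stationary distribution (2/3, 1/3), so the chain spends about two thirds of the time in the first state. No separate parameters for the initial distribution are needed once the matrix is known.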

Adding a Hierarchical Structure

The hierarchical hidden Markov model (HHMM) is a flexible extension of the HMM that can jointly model data observed on two different time scales. The two time series, one on a coarser and one on a finer scale, differ in the number of observations, e.g. monthly observations on the coarser scale and daily or weekly observations on the finer scale.

Following the concept of HMMs, we can model both state-dependent time series jointly. First, we treat the time series on the coarser scale as stemming from an ordinary HMM, which we refer to as the coarse-scale HMM: At each time point $t$ of the coarse-scale time space $\{1, \dots, T\}$, an underlying process $(S_t)_t$ selects one state from the coarse-scale state space $\{1, \dots, N\}$. We call $(S_t)_t$ the hidden coarse-scale state process. Depending on which state is active at $t$, one of $N$ distributions $f^{(1)}, \dots, f^{(N)}$ realizes the observation $X_t$. The process $(X_t)_t$ is called the observed coarse-scale state-dependent process. The processes $(S_t)_t$ and $(X_t)_t$ have the same properties as before, namely $(S_t)_t$ is a first-order Markov process and $(X_t)_t$ satisfies the conditional independence assumption.

Subsequently, we segment the observations of the fine-scale time series into $T$ distinct chunks, each of which contains all data points that correspond to the $t$-th coarse-scale time point. Assuming that we have $T^*$ fine-scale observations for every coarse-scale time point, we obtain $T$ chunks comprising $T^*$ fine-scale observations each.
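The segmentation step can be sketched in a few lines of Python. This is a minimal illustration under the simplifying assumption stated above, namely that every coarse-scale time point covers the same number of fine-scale observations. The function name and the toy data are hypothetical.

```python
def split_into_chunks(fine_obs, T_star):
    """Split a fine-scale series into consecutive chunks of T_star observations."""
    if len(fine_obs) % T_star != 0:
        raise ValueError("series length must be a multiple of T_star")
    return [fine_obs[i:i + T_star] for i in range(0, len(fine_obs), T_star)]


# Toy example: 3 coarse-scale time points with 4 fine-scale observations each.
fine_obs = list(range(12))
chunks = split_into_chunks(fine_obs, T_star=4)
```

Here `chunks[0]` holds the fine-scale observations that belong to the first coarse-scale time point, `chunks[1]` those of the second, and so on.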

The hierarchical structure now becomes apparent as we model each of the chunks by one of $N$ possible fine-scale HMMs. Each of the fine-scale HMMs, indexed by $i = 1, \dots, N$, has its own t.p.m. $\Gamma^{*(i)}$, initial distribution $\delta^{*(i)}$, stationary distribution $\pi^{*(i)}$, and state-dependent distributions $f^{*(i,1)}, \dots, f^{*(i,N^*)}$. Which fine-scale HMM is selected to explain the $t$-th chunk of fine-scale observations depends on the hidden coarse-scale state $S_t$. The $i$-th fine-scale HMM explaining the $t$-th chunk of fine-scale observations consists of the following two stochastic processes: At each time point $t^*$ of the fine-scale time space $\{1, \dots, T^*\}$, the process $(S^*_{t,t^*})_{t^*}$ selects one state from the fine-scale state space $\{1, \dots, N^*\}$. We call $(S^*_{t,t^*})_{t^*}$ the hidden fine-scale state process. Depending on which state is active at $t^*$, one of $N^*$ distributions $f^{*(i,1)}, \dots, f^{*(i,N^*)}$ realizes the observation $X^*_{t,t^*}$. The process $(X^*_{t,t^*})_{t^*}$ is called the observed fine-scale state-dependent process.

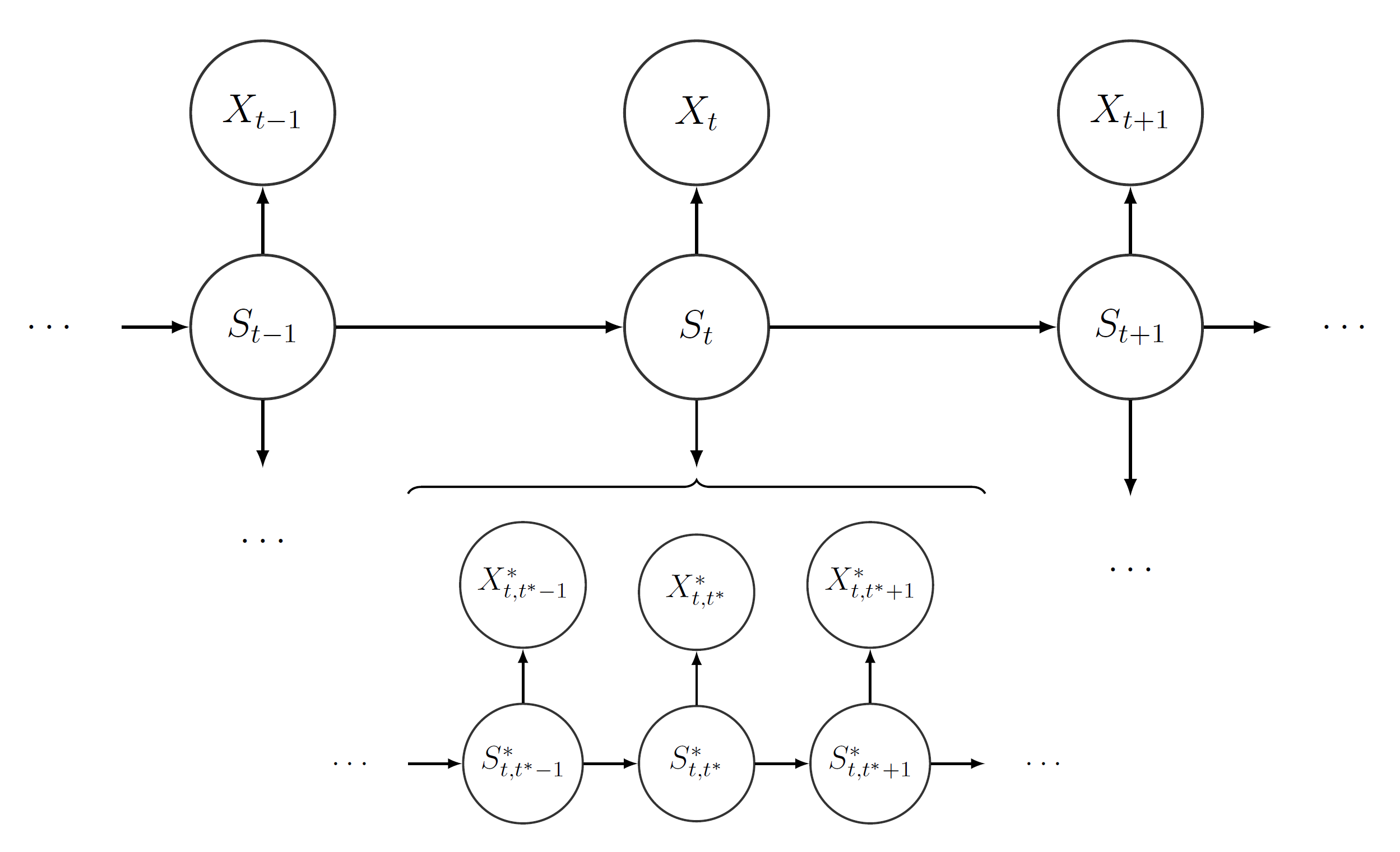
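The two-level structure can also be expressed as a simulation. The following Python sketch is a hedged illustration, not the fHMM implementation: it assumes normal state-dependent distributions on both scales, two states on each scale, and made-up parameters. The dictionary layout and all names are hypothetical.

```python
import random


def simulate_chain(rng, length, Gamma, delta):
    """Draw a Markov chain path: first state from delta, then rows of Gamma."""
    path = []
    for t in range(length):
        weights = delta if t == 0 else Gamma[path[-1]]
        path.append(rng.choices(range(len(delta)), weights=weights)[0])
    return path


def simulate_hhmm(T, T_star, coarse, fine, seed=1):
    """Simulate a hierarchical HMM with normal state-dependent distributions.

    coarse: dict with keys "Gamma", "delta", "means", "sds".
    fine:   list with one such dict per coarse-scale state.
    """
    rng = random.Random(seed)
    coarse_states = simulate_chain(rng, T, coarse["Gamma"], coarse["delta"])
    coarse_obs = [rng.gauss(coarse["means"][s], coarse["sds"][s]) for s in coarse_states]
    fine_states, fine_obs = [], []
    for s in coarse_states:
        hmm = fine[s]  # the coarse-scale state selects the fine-scale HMM
        chunk = simulate_chain(rng, T_star, hmm["Gamma"], hmm["delta"])
        fine_states.append(chunk)
        fine_obs.append([rng.gauss(hmm["means"][k], hmm["sds"][k]) for k in chunk])
    return coarse_states, coarse_obs, fine_states, fine_obs


# Hypothetical parameters; each delta is the stationary distribution of its Gamma.
coarse = {"Gamma": [[0.9, 0.1], [0.2, 0.8]], "delta": [2 / 3, 1 / 3],
          "means": [0.01, -0.01], "sds": [0.02, 0.05]}
fine = [
    {"Gamma": [[0.95, 0.05], [0.05, 0.95]], "delta": [0.5, 0.5],
     "means": [0.001, 0.0], "sds": [0.005, 0.01]},
    {"Gamma": [[0.8, 0.2], [0.3, 0.7]], "delta": [0.6, 0.4],
     "means": [0.0, -0.003], "sds": [0.015, 0.03]},
]
coarse_states, coarse_obs, fine_states, fine_obs = simulate_hhmm(
    T=12, T_star=20, coarse=coarse, fine=fine
)
```

Each chunk restarts its fine-scale chain from the initial distribution of the selected fine-scale HMM, so the fine-scale behavior within a chunk depends on the coarse-scale state of that chunk only.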

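The fine-scale processes $(S^*_{1,t^*})_{t^*}, \dots, (S^*_{T,t^*})_{t^*}$ and $(X^*_{1,t^*})_{t^*}, \dots, (X^*_{T,t^*})_{t^*}$ likewise satisfy the Markov property and the conditional independence assumption, respectively. Furthermore, it is assumed that the fine-scale HMM explaining $(X^*_{t,t^*})_{t^*}$ depends only on the coarse-scale state $S_t$. This hierarchical structure is visualized in the following: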