Modeling heterogeneity

Source:vignettes/v04_modeling_heterogeneity.Rmd

v04_modeling_heterogeneity.RmdIn the vignette on the model definition, we pointed out that the probit model can capture choice behavior heterogeneity by imposing a mixing distribution on the coefficient vector. The implementation in RprobitB is explained in this vignette1.

Estimating a joint normal mixing distribution

The mlogit package (Croissant 2020) contains the data

set Electricity, in which residential electricity customers

were asked to decide between four hypothetical electricity suppliers.

The suppliers differed in 6 characteristics:

- their fixed price

pfper kWh, - their contract length

cf, - an indicator

locwhether the supplier is a local company, - an indicator

wkwhether the supplier is a well known company, - an indicator

todwhether the supplier offers a time-of-day electricity price (which is higher during the day and lower during the night), and - an indicator

seaswhether the supplier’s price is seasonal dependent.

This constitutes a choice situation where choice behaviour

heterogeneity is expected: some customers might prefer a time-of-day

electricity price (because they may be not at home during the day),

while others can have the opposite preference. Ideally these differences

in preferences should be modelled using characteristics of the deciders.

In many cases (as in this data set) we do not have adequate information.

Instead these differences in taste can be captured by means of a mixing

distribution for the tod coefficient. This corresponds to

the assumption of a random coefficient from the underlying mixing

distribution to be drawn for each decider. We can use the estimated

mixing distribution to determine for example the share of deciders that

have a positive versus negative preference towards time-of-day

electricity prices.

Additionally, we expect correlations between the random coefficients

to certain covariates, for example a positive correlation between the

influence of loc and wk: deciders that prefer

local suppliers might also prefer well known companies due to

recommendations and past experiences, although they might be more

expensive than unknown suppliers. The fitted multivariate normal

distribution will reveal these correlations.

The following lines prepare the Electricity data set for

estimation. We use the helper function as_cov_names() that

relabels the data columns for alternative specific covariates into the

required format “<covariate>_<alternative>”,

compare to the vignette on choice data. Via the re

argument, we specify that we want to model random effects for all but

the price coefficient, which we will fix to -1 to interpret

the other estimates as monetary values.

data("Electricity", package = "mlogit")

Electricity <- as_cov_names(

Electricity, c("pf", "cl", "loc", "wk", "tod", "seas"), 1:4

)

data <- prepare_data(

form = choice ~ pf + cl + loc + wk + tod + seas | 0,

choice_data = Electricity,

re = c("cl", "loc", "wk", "tod", "seas")

)

model_elec <- fit_model(data, scale = "pf := -1", R = 1000)Calling the coef() method on the estimated model also

returns the estimated (marginal) variances of the mixing distribution

besides the average mean effects:

coef(model_elec)

#> Estimate (sd) Variance (sd)

#> 1 pf -1.00 (0.00) NA (NA)

#> 2 cl -0.26 (0.03) 0.30 (0.04)

#> 3 loc 2.82 (0.21) 7.03 (0.93)

#> 4 wk 2.05 (0.14) 3.83 (0.63)

#> 5 tod -9.83 (0.20) 11.67 (1.33)

#> 6 seas -9.92 (0.18) 6.20 (0.93)By the sign of the estimates we can for example deduce, that the

existence of the time-of-day electricity price tod in the

contract has a negative effect. However, the deciders are very

heterogeneous here, because the estimated variance of this coefficient

is large. The same holds for the contract length cl. In

particular, the estimated share of the population that prefers to have a

longer contract length equals:

cl_mu <- coef(model_elec)["cl", "mean"]

cl_sd <- sqrt(coef(model_elec)["cl", "var"])

pnorm(cl_mu / cl_sd)

#> [1] 0.317279The correlation between the covariates can be accessed as follows:2

cov_mix(model_elec, cor = TRUE)

#> cl loc wk tod seas

#> cl 1.00000000 0.077721396 0.04462012 -0.03169023 -0.122791071

#> loc 0.07772140 1.000000000 0.79003728 0.09679206 0.003348363

#> wk 0.04462012 0.790037276 1.00000000 0.09449239 -0.034341537

#> tod -0.03169023 0.096792064 0.09449239 1.00000000 0.518190666

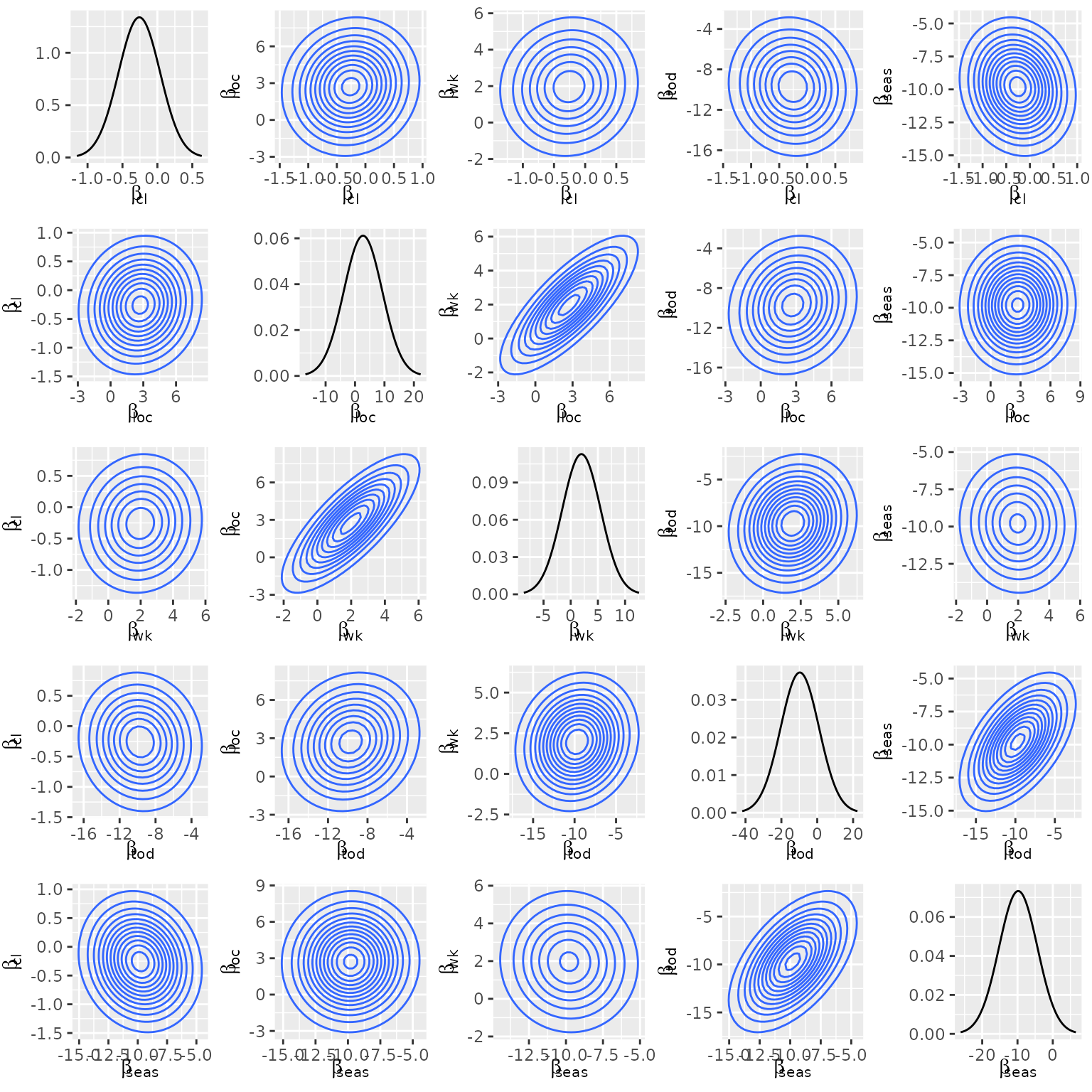

#> seas -0.12279107 0.003348363 -0.03434154 0.51819067 1.000000000Here, we see the confirmation of our initial assumption about a high

correlation between loc and wk. The pairwise

mixing distributions can be visualized via calling the

plot() method with the additional argument

type = mixture:

plot(model_elec, type = "mixture")

Estimating latent classes

More generally, RprobitB allows to specify a Gaussian mixture as the mixing distribution. In particular,

This specification allows for a) a better approximation of the true underlying mixing distribution and b) a preference based classification of the deciders.

To estimate a latent mixture, specify a named list

latent_classes with the following arguments and submit it

to the estimation routine fit_model:

C, the fixed number (greater or equal 1) of latent classes, which is set to 1 per default, 3weight_update, a boolean, set toTRUEfor a weight-based update of the latent classes, see below,dp_update, a boolean, set toTRUEfor a Dirichlet process-based update of the latent classes, see below,Cmax, the maximum number of latent classes, set to10per default.

Weight-based update of the latent classes

The following weight-based updating scheme is analogue to Bauer, Büscher, and Batram (2019) and executed within the burn-in period:

We remove class , if , i.e. if the class weight drops below some threshold . This case indicates that class has a negligible impact on the mixing distribution.

We split class into two classes and , if . This case indicates that class has a high influence on the mixing distribution whose approximation can potentially be improved by increasing the resolution in directions of high variance. Therefore, the class means and of the new classes and are shifted in opposite directions from the class mean of the old class in the direction of the highest variance.

We join two classes and to one class , if , i.e. if the euclidean distance between the class means and drops below some threshold . This case indicates location redundancy which should be repealed. The parameters of are assigned by adding the values of from and and averaging the values for and .

These rules contain choices on the values for , and . The adequate value for depends on the scale of the parameters. Per default, RprobitB sets

epsmin = 0.01,epsmax = 0.7, anddeltamin = 0.1.

These values can be adapted through the latent_class

argument.

Dirichlet process-based update of the latent classes

As an alternative to the weight-based updating scheme to determine the correct number of latent classes, RprobitB implements the Dirichlet process.4 The method allows to add more mixture components to the mixing distribution if needed for a better approximation, see Neal (2000) for a documentation of the general case. The literature offers many representations of the method, including the Chinese Restaurant Process (Aldous 2006), the stick-braking metaphor (Sethuraman 1994), and the Polya Urn model (Blackwell and MacQueen 1973).

In our case, we face the situation to find a distribution that explains the decider-specific coefficients , where is supposed to be a mixture of an unknown number of Gaussian densities, i.e. .

Let denote the class membership of . A priori, the mixture weights are given a Dirichlet prior with concentration parameter , i.e. . Rasmussen (1999) shows that

where denotes the gamma function and the number of elements that are currently allocated to class . Crucially, the last equation is independent of the class weights , yet it still depends on the finite number of latent classes. However, Li, Schofield, and Gönen (2019) shows that

where the notation means excluding the th element. We can let approach infinity to derive:

Note that the allocation probabilities do not sum to 1, instead

The difference to 1 equals

and constitutes the probability that a new cluster for observation is created. Neal (2000) points out that this probability is proportional to the prior parameter : A greater value for encourages the creation of new clusters, a smaller value for increases the probability of an allocation to an already existing class.

In summary, the Dirichlet process updates the allocation of each coefficient vector one at a time, dependent on the other allocations. The number of clusters can theoretically rise to infinity, however, as we delete unoccupied clusters, is bounded by . As a final step after the allocation update, we update the class means and covariance matrices by means of their posterior predictive distribution. The mean and covariance matrix for a new generated cluster is drawn from the prior predictive distribution. The corresponding formulas are given in Li, Schofield, and Gönen (2019).

The Dirichlet process directly integrates into our existing Gibbs

sampler. Given

values, it updated the class means

and class covariance matrices

.

The Dirichlet process updating scheme is implemented in the function

update_classes_dp(). In the following, we give a small

example in the bivariate case P_r = 2. We sample true class

means b_true and class covariance matrices

Omega_true for C_true = 3 true latent

classes.

set.seed(1)

P_r <- 2

C_true <- 3

N <- c(90, 70, 40)

(b_true <- matrix(replicate(C_true, rnorm(P_r)), nrow = P_r, ncol = C_true))

#> [,1] [,2] [,3]

#> [1,] -0.6264538 -0.8356286 0.3295078

#> [2,] 0.1836433 1.5952808 -0.8204684

(Omega_true <- matrix(replicate(C_true, oeli::rwishart(P_r + 1, 0.1 * diag(P_r)), simplify = TRUE),

nrow = P_r * P_r, ncol = C_true

))

#> [,1] [,2] [,3]

#> [1,] 0.3093652 0.14358543 0.2734617

#> [2,] 0.1012729 -0.07444148 -0.1474941

#> [3,] 0.1012729 -0.07444148 -0.1474941

#> [4,] 0.2648235 0.05751780 0.2184029

beta <- c()

for (c in 1:C_true) for (n in 1:N[c]) {

beta_n <- oeli::rmvnorm(

mean = b_true[, c],

Sigma = matrix(Omega_true[, c, drop = F], ncol = P_r)

)

beta <- cbind(beta, beta_n)

}We specify the following prior parameters (for their definition see the vignette on model fitting):

delta <- 0.1

mu_b_0 <- numeric(P_r)

Sigma_b_0 <- diag(P_r)

n_Omega_0 <- P_r + 2

V_Omega_0 <- diag(P_r)Initially, we start with C = 1 latent classes. The class

mean b is set to zero, the covariance matrix

Omega to the identity matrix:

z <- rep(1, ncol(beta))

C <- 1

b <- matrix(0, nrow = P_r, ncol = C)

Omega <- matrix(rep(diag(P_r), C), nrow = P_r * P_r, ncol = C)The following call to update_classes_dp() updates the

latent classes in 100 iterations. Note that we specify the

arguments Cmax and identify_classes. The

former denotes the maximum number of latent classes. This specification

is not a requirement for the Dirichlet process per se, but rather for

its implementation. Knowing the maximum possible class number, we can

allocate the required memory space, which leads to a speed improvement.

We later can verify that we won’t exceed the number of

Cmax = 10 latent classes at any point of the Dirichlet

process. Setting identify_classes = TRUE ensures that the

classes are ordered by their weights in a descending order to ensure

identifiability.

set.seed(1)

R <- 500

C_seq <- numeric(R)

for (r in seq_len(R)) {

dp <- update_classes_dp(

beta = beta, z = z, b = b, Omega = Omega,

delta = delta, mu_b_0 = mu_b_0, Sigma_b_0 = Sigma_b_0,

n_Omega_0 = n_Omega_0, V_Omega_0 = V_Omega_0,

identify_classes = TRUE, Cmax = 10

)

z <- dp$z

b <- dp$b

Omega <- dp$Omega

C_seq[r] <- dp$C

}

table(C_seq)

#> C_seq

#> 1 2 3 4 5 6

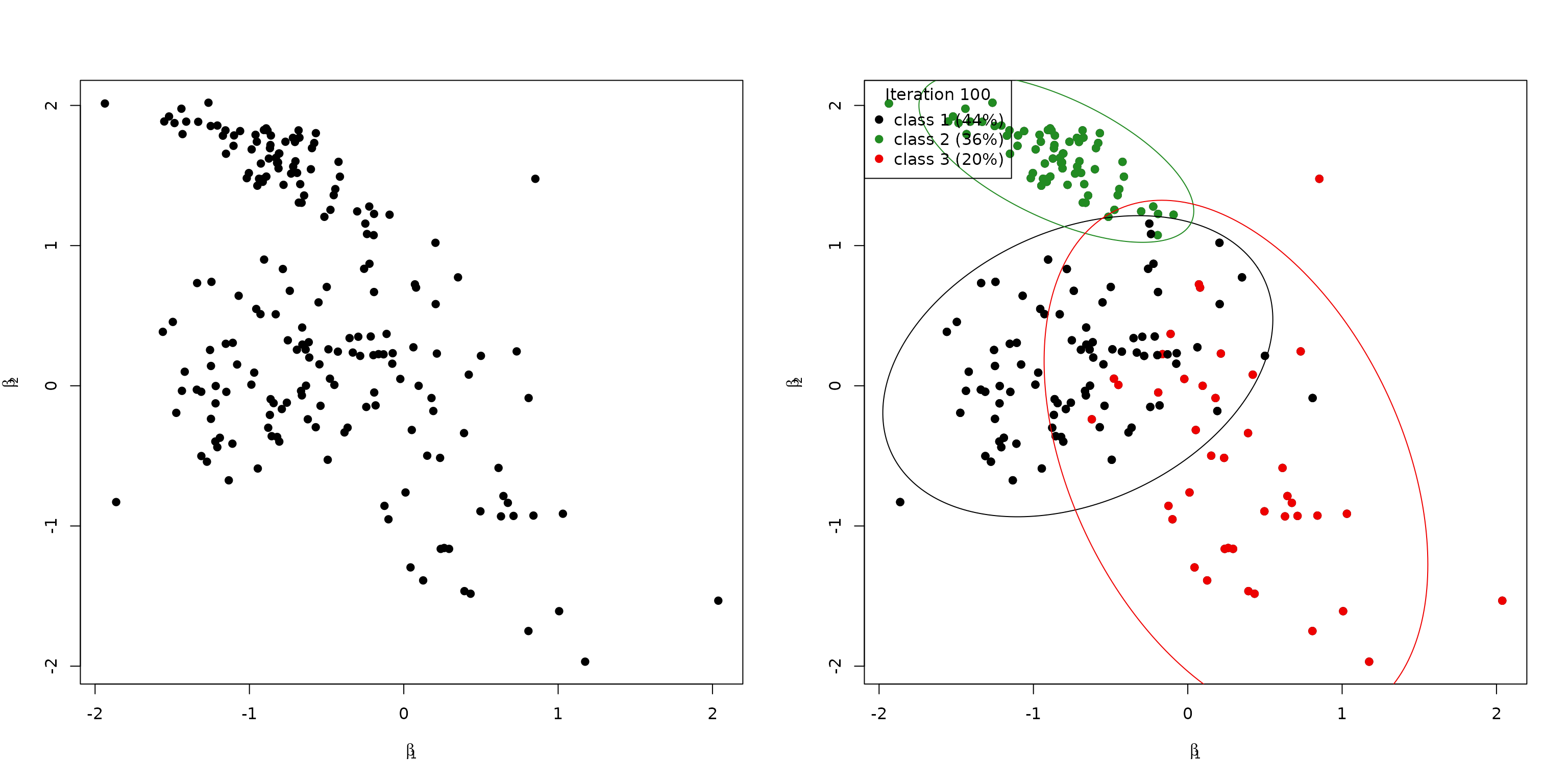

#> 60 59 262 101 16 2The following visualizes the true beta values (left) and

the class allocation in the final iteration (right):

par(mfrow = c(1, 2))

plot(t(beta), xlab = bquote(beta[1]), ylab = bquote(beta[2]), pch = 19)

plot_class_allocation(beta, z, b, Omega, r = R, perc = 0.95)